- Gem5 Performance Model.

- Step 1 Build benchmarks

- Step 2 Build gem5

- Step 3 Read gem5 config script

- System Model

- Step 4 Run gem5

- Analyze Trace 0 : TimingCPU + Inf Memory

- Analyze Trace 1 : TimingCPU + SingleCycle

- Analyze Trace 2: TimingCPU + Cache

- Analyze Trace 3: MinorCPU + Multi-cycle ALU

- Analyze Trace 4: OOO + Cache

- gdb gem5

- Acknowledgement

Videos

Gem5 Performance Model.

In this document we try to understand how gem5 models the performance of systems. We trace the events occurring within the simulator to try and understand gem5 internals.

git clone git@github.com:CMPT-7ARCH-SFU/gem5-lab.git

cd gem5-lab

| Folder | Description |
|---|---|
| gem5-config | Python scripts for setting up system and running gem5 simulation |
| benchmarks | Benchmarks that we will be running on top of gem5 |

Step 1 Build benchmarks

For this lab we have chosen the RISC-V ISA and use simple hand-written assembly to control exactly which instructions are modelled. A C/C++ program typically pulls in a ton of other code from the C runtime and libc, which makes it hard to obtain a full trace of events. With small assembly microbenchmarks we can obtain complete execution traces for analysis.

# Load modules

$ source /data/.local/modules/init/zsh

# If you are running this on CMPT 7ARCH machines then you should not have to module load

# Path is hardcoded in the makefile

module load rv-newlib # Check that riscv64 compiler suite is in your path.

cd benchmarks

make

Step 2 Build gem5

Gem5 is a large piece of software (builds are typically tens of GB), so we do not include its source in the gem5-lab or gem5-ass repositories. It is also listed in .gitignore so that you do not check it in by mistake. If you do need your own build, clone and build it as described below.

Use the preinstalled gem5 if your disk space quota is a problem for building gem5. This applies only to the labs and assignments. For final projects, you may need to request extra quota and build the gem5 binaries yourself.

# gem5 comes preinstalled at /data/gem5-baseline

export M5_PATH=/data/gem5-baseline

DO NOT BUILD. FOR LABS AND ASSIGNMENTS USE PRE-INSTALLED ABOVE

Follow the build instructions below only after you have requested at least 35G of disk space from helpdesk@cs.sfu.ca. This only applies to those doing projects on gem5.

Build instructions

git clone git@github.com:CMPT-7ARCH-SFU/gem5-baseline.git

cd gem5-baseline

## Stop the oh-my-zsh prompt from querying git status in this large repo (slow). Add --global to turn it off for all repos.

git config oh-my-zsh.hide-info 1

# First build might take a long time

# on 10 cores it may take 15 minutes

# Open a tmux session.

scons -j 8 build/RISCV/gem5.opt CPU_MODELS='AtomicSimpleCPU,O3CPU,TimingSimpleCPU,MinorCPU' --gold-linker

Step 3 Read gem5 config script

The gem5 configuration scripts are in the gem5-config folder. There are two files: run-micro.py and system.py.

$ ls gem5-config/

run-micro.py

system.py

| File | Description |
|---|---|
| run-micro.py | Specifying the CPU model to be used, interacting with the simulation, dumping stats, etc. |
| system.py | Putting together the system. Connecting the ports between the CPU and memory models |

Let's inspect run-micro.py to understand what's happening.

Open run-micro.py in your favorite editor. We are only showing code snippets.

- Classes defining function unit (FU) parameters. Multiple FU classes are defined. A CPU needs to include all FU types to be able to run different types of programs. Gem5 gives you the option of stripping out some of the FUs if your target benchmarks only include a few instruction types, e.g., if you are only running integer benchmarks you can discard the FP FU pool, and if you are not running any programs with SIMD operations you can discard the SIMD FU.

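```python
from m5.defines import buildEnv
from m5.objects import FUDesc, OpDesc

class IntALU(FUDesc):
    opList = [ OpDesc(opClass='IntAlu', opLat=1) ]
    count = 32

class IntMultDiv(FUDesc):
    opList = [ OpDesc(opClass='IntMult', opLat=1),
               OpDesc(opClass='IntDiv', opLat=20, pipelined=False) ]

    # On x86, opLat is the latency of each micro-op, so divide is 1 cycle.
    # Note the trailing comma: ('x86',) is a one-element tuple.
    if buildEnv['TARGET_ISA'] in ('x86',):
        opList[1].opLat = 1

    count = 32
```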

There are three parameters that we care about:

| Parameter | Description |
|---|---|
| opClass | Each instruction in your program specifies an opClass. You can find these defined in the .isa files, e.g., $M5_PATH/src/arch/riscv/isa/decoder.isa; look for names ending in Op (e.g., IntMultOp). By default every instruction is IntAlu unless specified otherwise. Here we define the opClass so that decoded instructions can be forwarded to the appropriate queue. You can have more than one function unit with the same opClass. |
| opLat | The latency, in clock cycles, of an instruction executing on this function unit. E.g., for IntAlu the latency is 1 cycle and for IntDiv it is 20 cycles, unless the ISA is x86, in which case Div is 1 cycle. This is because on x86 the latency here is the latency of the micro-ops. |
| pipelined | The throughput, i.e., the delay between subsequent operations issued to the same function unit. A pipelined unit accepts a new operation every cycle; e.g., IntDiv with pipelined=False means back-to-back operations can only be issued 20 cycles apart. |
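To make `opLat` and `pipelined` concrete, here is a minimal Python sketch (plain Python, not gem5 code) of a single function unit receiving back-to-back independent operations. It assumes every op is ready to issue at cycle 0 and that a non-pipelined unit stays busy for the full `opLat`; `issue_schedule` is a hypothetical helper.

```python
def issue_schedule(num_ops, op_lat, pipelined):
    """(issue_cycle, complete_cycle) for back-to-back independent ops on ONE
    function unit. All ops are assumed ready to issue at cycle 0."""
    schedule = []
    next_free = 0  # earliest cycle at which the unit accepts a new op
    for _ in range(num_ops):
        issue = next_free
        schedule.append((issue, issue + op_lat))
        # Pipelined: a new op can enter every cycle.
        # Not pipelined: the unit is busy until the current op completes.
        next_free = issue + (1 if pipelined else op_lat)
    return schedule

print(issue_schedule(3, op_lat=20, pipelined=True))   # [(0, 20), (1, 21), (2, 22)]
print(issue_schedule(3, op_lat=20, pipelined=False))  # [(0, 20), (20, 40), (40, 60)]
```

Both configurations take 20 cycles for a single divide; only the spacing between issues (the throughput) changes.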

The FU list is initialized for all CPUs except TimingSimple. TimingSimple assumes each instruction takes a single cycle and does not model the function units. To account for operations that take more than one cycle on the Minor CPU, we need to define functional units that inherit the MinorFU interface provided in gem5.

```python
class Minor4CPU(MinorCPU):
    ...
    fuPool = Minor4_FUPool()

class O3_W256CPU(DerivO3CPU):
    ...
    fuPool = Ideal_FUPool()
```

The figure below shows the system performance model. Each CPU includes multiple FU classes, and each FU class includes multiple FUs (its count). Each FU behaves like a queue: if it is pipelined, its throughput is one operation per cycle; otherwise it accepts a new operation only after the current one finishes. The latency is set by opLat.
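Extending the same idea to an FU class with `count` identical units, this sketch sends each independent operation to whichever unit frees up first. It assumes all ops are ready at cycle 0 and ignores issue-width limits; `pool_schedule` is a hypothetical helper, not gem5 code.

```python
import heapq

def pool_schedule(num_ops, count, op_lat, pipelined):
    """Completion cycle of each independent op sent to an FU class that has
    `count` identical units; each op goes to the unit that is free earliest."""
    free_at = [0] * count  # min-heap: cycle at which each unit accepts a new op
    heapq.heapify(free_at)
    completions = []
    for _ in range(num_ops):
        issue = heapq.heappop(free_at)  # earliest available unit
        completions.append(issue + op_lat)
        # That unit is busy for 1 cycle (pipelined) or op_lat cycles (not pipelined).
        heapq.heappush(free_at, issue + (1 if pipelined else op_lat))
    return completions

print(pool_schedule(4, count=2, op_lat=20, pipelined=False))  # [20, 20, 40, 40]
print(pool_schedule(4, count=1, op_lat=20, pipelined=False))  # [20, 40, 60, 80]
```

Doubling `count` for a non-pipelined unit roughly halves the time to drain a burst of independent operations, which is why both `count` and `pipelined` matter for throughput.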

- CPU configuration. Each CPU class sets its own pipeline widths and buffer sizes, e.g.:

```python
class O3_W2KCPU(DerivO3CPU):
    branchPred = BranchPredictor()
    fuPool = Ideal_FUPool()
    # Front-end and back-end widths (per cycle)
    fetchWidth = 32
    decodeWidth = 32
    renameWidth = 32
    dispatchWidth = 32
    issueWidth = 32
    wbWidth = 32
    commitWidth = 32
    squashWidth = 32
    # Buffer, queue and register file sizes
    fetchQueueSize = 256
    LQEntries = 250
    SQEntries = 250
    numPhysIntRegs = 1024
    numPhysFloatRegs = 1024
    numIQEntries = 2096
    numROBEntries = 2096
```

We only set a few of the parameters and leave the others at their default values. We typically do not modify the Delay parameters, only the buffer and width parameters. Here is a description of some of the parameters; to learn about all of them, follow along with the class lectures. We include a figure here to illustrate.

These parameters control the CPU front and backends. You can find parameters definitions for the O3CPU under $M5_PATH/src/cpu/o3/O3CPU.py. Similarly for minor you can find under $M5_PATH/src/cpu/minor/MinorCPU.py
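As a rough back-of-the-envelope check on the width parameters, this sketch assumes that in steady state no stage can pass more instructions per cycle than its width, so the narrowest stage caps IPC. The dictionary mirrors O3_W2KCPU above; `peak_ipc` is a hypothetical helper, not part of gem5, and the bound ignores dependencies, FU contention, branch mispredictions and cache misses.

```python
def peak_ipc(cpu_params):
    """Upper bound on instructions per cycle set only by pipeline widths."""
    width_keys = ["fetchWidth", "decodeWidth", "renameWidth", "dispatchWidth",
                  "issueWidth", "wbWidth", "commitWidth"]
    # The narrowest stage limits the whole pipeline.
    return min(cpu_params[k] for k in width_keys)

o3_w2k = dict(fetchWidth=32, decodeWidth=32, renameWidth=32, dispatchWidth=32,
              issueWidth=32, wbWidth=32, commitWidth=32)
narrow_issue = dict(o3_w2k, issueWidth=4)  # same CPU but a 4-wide issue stage

print(peak_ipc(o3_w2k))        # 32
print(peak_ipc(narrow_issue))  # 4
```

Widening every stage except one buys nothing; the achieved IPC reported in stats is usually far below this bound.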

System Model

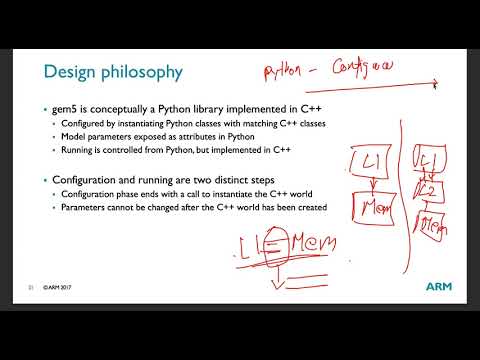

The two main components of the gem5 system model that we will deal with are the CPU model and the memory model. gem5 is highly configurable, permitting any CPU model to be connected to any memory model. Here we show 3 examples:

- An OOO CPU connected to the Inf memory model, i.e., no cache misses. All memory accesses complete instantaneously.

- An OOO CPU model connected to L1 instruction and data caches in a two-level hierarchy (including a shared L2), with a detailed memory controller (different DDR parameters can be set in MemCtrl).

CPU Models.

gem5 has a few CPU models for different purposes.

In gem5-config/run-micro.py, the CPU model is selected by the first command-line parameter.

The information needed to make highly accurate models isn't generally public for non-free CPUs, so you must rely either on vendor-provided models or on experiments/reverse engineering.

There is no simple answer to “what is the best CPU”; in theory you have to understand each model and decide which one is closer to your target system.

| CPU | 1-liner |
|---|---|
| AtomicSimpleCPU | The default one. All operations (including memory accesses) happen instantaneously. The fastest simulation, but not realistic at all. |
| TimingSimpleCPU | Non-memory instructions operate in 1 cycle. Memory instructions depend on the memory model. See Memory model. |
| MinorCPU | Generic in-order multi-issue core. Honors all dependencies (true and false). Can issue parallel instructions. Fixed 4-stage pipeline. |
| DerivO3CPU | Most detailed OOO core in gem5. |

Classic RISC pipeline

https://en.wikipedia.org/wiki/Classic_RISC_pipeline

gem5's Minor CPU implements a similar pipeline, but with 4 stages. The Minor CPU was initially designed to mimic the classic pipeline, but as folks started adding bells and whistles it morphed into a combination of in-order and OOO features.

Superscalar processor

https://en.wikipedia.org/wiki/Superscalar_processor

http://www.lighterra.com/papers/modernmicroprocessors/ explains it well.

You basically decode multiple instructions in one go, and run them at the same time if they can go in separate functional units and have no conflicts.

And so the concept of a branch predictor must come in here: when a conditional branch is reached, you have to decide which side to execute before knowing for sure.

This is why it is called a type of instruction-level-parallelism.

Although this is a microarchitectural feature, it is so important that it is publicly documented. For example:

Execution unit

gem5 calls them “functional units”.

gem5 explicitly models functional units, and they are used by both MinorCPU and DerivO3CPU. (TimingSimpleCPU does not model the latency of function units; every instruction completes in 1 cycle.)

Each instruction is marked with a class, and each class can execute in a given functional unit.

Which units are available is visible, for example, in the config.ini of a MinorCPU run. Functional units are not present in simple CPUs like TimingSimpleCPU.

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-flags=Event,ExecAll,FmtFlag --debug-file=trace.out --debug-start=1000 --debug-end=1000 ./gem5-config/run_micro.py Minor4 SingleCycle ./benchmarks/hello.riscv.bin --clock 1GHz

For example, m5out/config.ini of a Minor run contains:

....

[system.cpu]

type=MinorCPU

children=branchPred dcache dtb executeFuncUnits icache interrupts isa itb power_state tracer workload

....

executeInputWidth=2

executeIssueLimit=2

....

Here also note the executeInputWidth=2 and executeIssueLimit=2 suggesting that this is a dual-issue superscalar processor.

The system.cpu points to:

[system.cpu.executeFuncUnits]

type=MinorFUPool

children=funcUnits0 funcUnits1 funcUnits2 funcUnits3 funcUnits4 funcUnits5 funcUnits6 funcUnits7

and the first two units, in full, are:

[system.cpu.executeFuncUnits.funcUnits0]

type=MinorFU

children=opClasses timings

opLat=3

[system.cpu.executeFuncUnits.funcUnits0.opClasses]

type=MinorOpClassSet

children=opClasses

[system.cpu.executeFuncUnits.funcUnits0.opClasses.opClasses]

type=MinorOpClass

opClass=IntAlu

and:

[system.cpu.executeFuncUnits.funcUnits1]

type=MinorFU

children=opClasses timings

opLat=3

[system.cpu.executeFuncUnits.funcUnits1.opClasses]

type=MinorOpClassSet

children=opClasses

[system.cpu.executeFuncUnits.funcUnits1.opClasses.opClasses]

type=MinorOpClass

opClass=IntAlu

So we understand that:

- the first and second functional units are IntAlu, and both have a latency of 3 cycles

- each functional unit can have a set of opClass values with more than one type. Those first two units just happen to have a single type.

The full list is:

- 0, 1: IntAlu, opLat=3

- 2: IntMult, opLat=3

- 3: IntDiv, opLat=9. So we see that a more complex operation such as division has higher latency.

- 4: FloatAdd, FloatCmp, and a gazillion other floating-point related classes, opLat=6

- 5: SimdPredAlu (vector predication unit), opLat=3

- 6: MemRead, MemWrite, FloatMemRead, FloatMemWrite, opLat=1

- 7: IprAccess (internal processor register access) and InstPrefetch (instruction prefetching unit), opLat=1
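Because config.ini is a plain INI file, the values discussed above can also be pulled out with Python's standard `configparser` module instead of reading them by eye. A minimal sketch, assuming every line is `key=value` under a `[section]` header as in the excerpts above; `load_config_ini` and `fu_latencies` are hypothetical helpers, and the sample text is trimmed from those excerpts.

```python
import configparser

def load_config_ini(ini_text):
    """Parse a gem5 config.ini (one [section] per SimObject)."""
    # interpolation=None: values are literal; only '=' separates key and value.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep gem5's camelCase parameter names
    parser.read_string(ini_text)
    return parser

def fu_latencies(parser, pool="system.cpu.executeFuncUnits"):
    """Map each MinorFU section (funcUnits0, funcUnits1, ...) to its opLat."""
    prefix = pool + ".funcUnits"
    return {name: parser.getint(name, "opLat")
            for name in parser.sections()
            if name.startswith(prefix) and name[len(prefix):].isdigit()}

sample = """
[system.cpu]
type=MinorCPU
executeInputWidth=2
executeIssueLimit=2

[system.cpu.executeFuncUnits.funcUnits0]
type=MinorFU
children=opClasses timings
opLat=3

[system.cpu.executeFuncUnits.funcUnits0.opClasses]
type=MinorOpClassSet
children=opClasses
"""
cfg = load_config_ini(sample)
print(cfg.getint("system.cpu", "executeIssueLimit"))  # 2
print(fu_latencies(cfg))  # {'system.cpu.executeFuncUnits.funcUnits0': 3}
```

For a real run, pass the file contents instead, e.g. `load_config_ini(open("m5out/config.ini").read())`.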

The top-level configuration can be found in gem5-config/run-micro.py

The complete list can be found in $M5_PATH/src/cpu/minor/MinorCPU.py:

```python
class MinorDefaultFUPool(MinorFUPool):
    funcUnits = [MinorDefaultIntFU(), MinorDefaultIntFU(),
                 MinorDefaultIntMulFU(), MinorDefaultIntDivFU(),
                 MinorDefaultFloatSimdFU(), MinorDefaultPredFU(),
                 MinorDefaultMemFU(), MinorDefaultMiscFU()]
```

In gem5, each instruction has a certain opClass that determines on which unit it can run. For example, class AddImm (this example comes from gem5's ARM ISA), which is what we get for a simple add x1, x2, 1, sets itself as an IntAluOp in its constructor, as expected:

```cpp
AddImm::AddImm(ExtMachInst machInst,
               IntRegIndex _dest,
               IntRegIndex _op1,
               uint32_t _imm,
               bool _rotC)
    : DataImmOp("add", machInst, IntAluOp, _dest, _op1, _imm, _rotC)
```
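As a worked example of how the opClass selects a unit, this sketch encodes a subset of the Minor default FU list above as a Python dict and adds up opLat along a chain of dependent instructions. It is only an estimate of execute latency, assuming each instruction waits for its predecessor's result and nothing else stalls (pipeline stages, forwarding and FU contention are ignored); `MINOR_DEFAULT_FUS` and `dependent_chain_latency` are hypothetical names, not gem5 APIs.

```python
# opClass -> (indices of units that accept it, opLat), from the Minor list above.
# Only a subset of the floating-point classes is included.
MINOR_DEFAULT_FUS = {
    "IntAlu": ([0, 1], 3),
    "IntMult": ([2], 3),
    "IntDiv": ([3], 9),
    "FloatAdd": ([4], 6),
    "FloatCmp": ([4], 6),
    "SimdPredAlu": ([5], 3),
    "MemRead": ([6], 1),
    "MemWrite": ([6], 1),
    "IprAccess": ([7], 1),
    "InstPrefetch": ([7], 1),
}

def dependent_chain_latency(op_classes):
    """Sum of opLat for a chain in which each op consumes the previous result."""
    return sum(MINOR_DEFAULT_FUS[op][1] for op in op_classes)

# e.g. addi -> mul -> div, each using the previous instruction's result
print(dependent_chain_latency(["IntAlu", "IntMult", "IntDiv"]))  # 15
```

Two independent IntAlu instructions, by contrast, can execute side by side, because two units (0 and 1) accept IntAlu.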

For a DerivO3CPU run, config.ini instead contains:

[system.cpu]

type=DerivO3CPU

children=branchPred dcache dtb fuPool icache interrupts isa itb power_state tracer workload

and following fuPool:

[system.cpu.fuPool]

type=FUPool

children=FUList0 FUList1 FUList2 FUList3 FUList4 FUList5 FUList6 FUList7 FUList8 FUList9

so for example FUList0 is:

[system.cpu.fuPool.FUList0]

type=FUDesc

children=opList

count=6

eventq_index=0

opList=system.cpu.fuPool.FUList0.opList

[system.cpu.fuPool.FUList0.opList]

type=OpDesc

eventq_index=0

opClass=IntAlu

opLat=1

pipelined=true

and FUList1:

[system.cpu.fuPool.FUList1.opList0]

type=OpDesc

eventq_index=0

opClass=IntMult

opLat=3

pipelined=true

[system.cpu.fuPool.FUList1.opList1]

type=OpDesc

eventq_index=0

opClass=IntDiv

opLat=20

pipelined=false

So summarizing the first few units we have:

- 0: IntAlu with opLat=1 (count=6)

- 1: IntMult with opLat=3 and IntDiv with opLat=20 (not pipelined)

- 2: FloatAdd, FloatCmp, FloatCvt with opLat=2

Step 4 Run gem5

Run TimingSimple

export M5_PATH=/data/gem5-baseline

cd $REPO # your gem5-lab checkout

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --outdir=Simple_m5out --debug-flags=Event,ExecAll,FmtFlag --debug-file=trace.out --debug-start=1000 --debug-end=1000 ./gem5-config/run_micro.py Simple SingleCycle ./benchmarks/hello.riscv.bin --clock 1GHz

# Pay attention to how the clock is set, case matters

| Argument | Description |
|---|---|
| --outdir | The output directory |
| --redirect-stdout | Redirects the stdout of the program simulated by gem5. |
| --debug-start, --debug-end | Tick window during which tracing is switched on. ALWAYS USE FOR PROGRAMS OUTSIDE THE LAB |
| ./gem5-config/run_micro.py | Top-level script. Sets up the simulation model and interacts with simulator events |
| Simple SingleCycle ./benchmarks/hello.riscv.bin --clock 1GHz | Parameters passed to the simulation script |
| hello.riscv.bin | The binary to be simulated |
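The values given to --debug-start/--debug-end, and the timestamps in the traces, are ticks rather than cycles. Assuming the default tick of 1 ps (sim_freq = 10^12 in the stats file), this sketch converts between the two for a given --clock; the helper names are hypothetical and the integer division assumes the clock period is a whole number of ticks.

```python
TICKS_PER_SECOND = 10**12  # default gem5 tick = 1 ps (sim_freq in stats.txt)

def ticks_per_cycle(clock_hz):
    """Ticks in one clock period (assumes it divides evenly)."""
    return TICKS_PER_SECOND // clock_hz

def tick_to_cycle(tick, clock_hz):
    return tick // ticks_per_cycle(clock_hz)

print(ticks_per_cycle(10**9))       # 1000  (--clock 1GHz)
print(ticks_per_cycle(2 * 10**9))   # 500   (--clock 2GHz)
print(tick_to_cycle(60000, 10**9))  # 60    (--debug-end=60000 at 1GHz)
```

This is why, at 1GHz, consecutive single-cycle instructions in the traces appear 1000 ticks apart.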

Generate O3

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --outdir=O3_m5out --redirect-stdout --debug-flags=O3PipeView --debug-file=trace.out ./gem5-config/run_micro.py DefaultO3 Slow ./benchmarks/array.riscv.bin

| Argument | Description |
|---|---|
| --debug-flags=O3PipeView --debug-file=trace.out | Switches on O3PipeView tracing and dumps trace.out in O3_m5out/, which can be read by Konata and the O3 pipeviewer. |
| --redirect-stdout | Redirects the stdout of the program simulated by gem5. |
| --debug-start, --debug-end | Tick window during which tracing is switched on. ALWAYS USE FOR PROGRAMS OUTSIDE THE LAB |
| ./gem5-config/run_micro.py | Top-level script. Sets up the simulation model and interacts with simulator events |
| DefaultO3 Slow ./benchmarks/array.riscv.bin | Parameters passed to the simulation script |
| array.riscv.bin | The binary to be simulated |

Event Driven Simulation

gem5 is an event-based simulator, and as such the event queue is one of the crucial elements in the system. Every action that takes time (notably reading from memory) models that delay by scheduling an event in the future.

The gem5 event queue stores the callback events scheduled for future points in time.

The event queue is implemented in the class EventQueue in the file src/sim/eventq.hh.

Not all times need to have an associated event: if a given time has no events, gem5 just skips it and jumps to the next event. The queue is basically a time-ordered linked list of events.
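The idea of jumping from one scheduled event to the next can be sketched in a few lines. This toy queue is an illustration only: it uses a binary heap (Python's `heapq`) rather than gem5's linked list, and the class and method names are hypothetical.

```python
import heapq
import itertools

class EventQueue:
    """Toy discrete-event queue: callbacks ordered by (tick, insertion order)."""
    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker for events at the same tick
        self.cur_tick = 0

    def schedule(self, when, callback):
        heapq.heappush(self._heap, (when, next(self._seq), callback))

    def run(self):
        while self._heap:
            # Jump straight to the next scheduled tick; empty ticks are never visited.
            when, _, callback = heapq.heappop(self._heap)
            self.cur_tick = when
            callback()

visited = []
eq = EventQueue()
for t in (5000, 0, 1000):
    eq.schedule(t, lambda t=t: visited.append(t))
eq.run()
print(visited)  # [0, 1000, 5000]
```

Only three callbacks run even though 5000 ticks elapse, which is what makes event-driven simulation cheap when little is happening.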

Important examples of events include:

- CPU ticks

- peripherals and memory

For example, at the beginning of an AtomicSimpleCPU simulation, gem5 sets up exactly two events:

- the first CPU cycle

- one exit event at the end of time, which triggers gem5's "simulate() limit reached"

Then, at the end of the callback of one tick event, another tick is scheduled. And so the simulation progresses tick by tick, until an exit event happens.
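The self-rescheduling pattern can be sketched as follows. This is a toy model, not gem5 code: it assumes one instruction per CPU tick event and treats "all instructions executed" as the program exit; `simulate`, the event names and the exit condition are hypothetical. `MAX_TICK` is the 2^64 - 1 value that appears in the trace below.

```python
import heapq

MAX_TICK = 2**64 - 1  # 18446744073709551615, where the exit event sits

def simulate(num_insts, period):
    """CPU tick event that reschedules itself every `period` ticks, plus an
    exit event at MAX_TICK. Returns (final_tick, reason)."""
    if num_insts < 1:
        raise ValueError("num_insts must be >= 1")
    queue = []  # entries are (tick, sequence number, event name)
    seq = 0

    def schedule(when, name):
        nonlocal seq
        heapq.heappush(queue, (when, seq, name))
        seq += 1

    schedule(0, "cpu_tick")     # the first CPU cycle
    schedule(MAX_TICK, "exit")  # "simulate() limit reached" if nothing else ends the run
    executed = 0
    while queue:
        tick, _, name = heapq.heappop(queue)
        if name == "exit":
            return tick, "simulate() limit reached"
        executed += 1  # assume one instruction per tick event
        if executed == num_insts:
            return tick, "program exited"
        schedule(tick + period, "cpu_tick")  # end of callback: schedule the next tick

print(simulate(3, 1000))  # (2000, 'program exited')
```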

# start event

0: Event: system.cpu.wrapped_function_event: EventFunctionWrapped 17 scheduled @ 0

0: Event: Event_21: generic 21 scheduled @ 0

# Timeout event at MaxTick (2^64 - 1). If nothing else ends the run first, gem5 forcefully stops at this tick

0: Event: Event_21: generic 21 rescheduled @ 18446744073709551615

0: Event: system.cpu.wrapped_function_event: EventFunctionWrapped 17 executed @ 0

...

Program exits

9573000: ExecEnable: system.cpu: A0 T0 : @m5_work_end : M5Op : IntAlu : D=0x0000000000000000 flags=(IsInteger|IsSerializeAfter|IsNonSpeculative)

Analyze Trace 0 : TimingCPU + Inf Memory

export M5_PATH=/data/gem5-baseline

## Run TimingCPU with Inf Memory (0 latency, Inf capacity)

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-start=0 --debug-end=60000 --debug-file=trace.out --outdir=Inf_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Simple Inf ./benchmarks/hello.riscv.bin --clock 1GHz

$ cat Inf_m5out/trace.out

# Every instruction completes instantaneously

0: ExecEnable: system.cpu: A0 T0 : @_start : addi a0, zero, 1 : IntAlu : D=0x0000000000000001 flags=(IsInteger)

0: Event: system.mem_ctrl.wrapped_function_event: EventFunctionWrapped 9 scheduled @ 0

0: Event: system.mem_ctrl.wrapped_function_event: EventFunctionWrapped 9 executed @ 0

0: Event: Event_14: Timing CPU icache tick 14 scheduled @ 0

0: Event: Event_14: Timing CPU icache tick 14 executed @ 0

0: ExecEnable: system.cpu: A0 T0 : @_start+4 : addi a1, zero, 0 : IntAlu : D=0x0000000000000000 flags=(IsInteger)

In this mode every instruction completes instantaneously, with 0 latency.

Analyze Trace 1 : TimingCPU + SingleCycle

cd benchmarks # first 3 lines needed if you haven't already compiled benchmarks

make

cd ..

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-start=0 --debug-end=60000 --debug-file=trace.out --outdir=Simple_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Simple SingleCycle ./benchmarks/hello.riscv.bin --clock 1GHz

1000: ExecEnable: system.cpu: A0 T0 : @_start : addi a0, zero, 1 : IntAlu : D=0x0000000000000001 flags=(IsInteger)

...

2000: ExecEnable: system.cpu: A0 T0 : @_start+4 : addi a1, zero, 0 : IntAlu : D=0x0000000000000000 flags=(IsInteger)

...

- hello.riscv.bin contains no memory operations (even though the assembly may appear to). All pseudo-ops map to integer ops.

- The SingleCycle memory model takes 1000 ps (1 ns) for each memory access; see line 49 of gem5-config/system.py.

- Every instruction requires one memory access to read the instruction itself. Remember: instructions are also data. Hence every non-memory operation completes in 1000 ps.
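To measure how many ticks each instruction takes, you can diff the timestamps of consecutive ExecEnable lines. A minimal sketch, assuming the ` : `-separated ExecEnable layout shown in the traces above; `exec_records` and `ticks_between_insts` are hypothetical helpers. With SingleCycle memory the gaps should be 1000 ticks; with Inf memory they should be 0.

```python
def exec_records(lines):
    """Yield (tick, pc_symbol, disassembly, op_class) for each ExecEnable line."""
    for line in lines:
        if ": ExecEnable:" not in line:
            continue  # skip Event and other debug-flag output
        fields = [f.strip() for f in line.split(" : ")]
        tick = int(fields[0].split(":", 1)[0])
        yield tick, fields[1], fields[2], fields[3]

def ticks_between_insts(lines):
    ticks = [rec[0] for rec in exec_records(lines)]
    return [b - a for a, b in zip(ticks, ticks[1:])]

# Lines copied from the traces above (the Event line only exercises the filter).
trace = [
    "0: Event: Event_14: Timing CPU icache tick 14 executed @ 0",
    "1000: ExecEnable: system.cpu: A0 T0 : @_start : addi a0, zero, 1 : IntAlu : D=0x0000000000000001 flags=(IsInteger)",
    "2000: ExecEnable: system.cpu: A0 T0 : @_start+4 : addi a1, zero, 0 : IntAlu : D=0x0000000000000000 flags=(IsInteger)",
]
print(ticks_between_insts(trace))  # [1000]
```

On a real run: `with open("Simple_m5out/trace.out") as f: print(ticks_between_insts(f))`.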

Check yourself:

- Change line 49 in system.py from 1ns to 5ns and see what happens to the rate at which instructions complete.

Example with memory ops.

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-file=trace.out --debug-start=0 --debug-end=60000 --outdir=Simple_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Simple SingleCycle ./benchmarks/array.riscv.bin --clock 1GHz

Analyze Trace 2: TimingCPU + Cache

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-file=trace.out --debug-start=0 --outdir=Simple_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Simple Slow ./benchmarks/array.riscv.bin --clock 1GHz

Analyze Trace 3: MinorCPU + Multi-cycle ALU

As we have seen, operations can take multiple cycles to complete in the Minor CPU model. In this section, we will change the latency of integer ALU operations to a single cycle and see how it affects performance.

Default Minor 4 CPU with Multi-cycle ALU

Using the following commands, we will obtain traces and statistics for two of the microbenchmarks, array.riscv.bin and hello.riscv.bin, with multi-cycle ALU operations. You can also tweak the number of cycles taken by different operations and see how that affects performance.

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-file=trace.out --debug-start=0 --outdir=Minor4_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Minor4 SingleCycle ./benchmarks/array.riscv.bin --clock 1GHz

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-file=trace.out --debug-start=0 --outdir=Minor4_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py Minor4 SingleCycle ./benchmarks/hello.riscv.bin --clock 1GHz

Minor 4 CPU with Single-cycle ALU

- Change the latency of integer operations (line 126 in run_micro.py) to 1 cycle and see how performance is affected, using the same two commands as in the previous subsection.

Check yourself

- Why does changing opLat affect the performance of hello.riscv and array.riscv differently?

Analyze Trace 4: OOO + Cache

export M5_PATH=/data/gem5-baseline

$ $M5_PATH/build/RISCV/gem5.opt --redirect-stdout --debug-file=trace.out --debug-start=0 --outdir=O3_m5out --debug-flags=Event,ExecAll,FmtFlag ./gem5-config/run_micro.py DefaultO3 Slow ./benchmarks/array.riscv.bin --clock 1GHz

To see all CPU options:

export M5_PATH=/data/gem5-baseline

$M5_PATH/build/RISCV/gem5.opt ./gem5-config/run_micro.py --help

Check yourself

- What are the OOO processor models declared in run_micro.py?

- What is the difference between O3_W256, O3_W2K, and DefaultO3?

Stats file

First, the statistics file (stats.txt in the output directory) contains general statistics about the execution:

The statistic dump begins with ---------- Begin Simulation Statistics ----------. There may be multiple of these in a single file if there are multiple statistic dumps during the gem5 execution. This is common for long running applications, or when restoring from checkpoints.

A couple of important statistics are sim_seconds which is the total simulated time for the simulation, sim_insts which is the number of instructions committed by the CPU, and host_inst_rate which tells you the performance of gem5.

Next, the SimObjects’ statistics are printed. For instance, the memory controller statistics. This has information like the bytes read by each component and the average bandwidth used by those components.

| Stat | # | Description |
|---|---|---|
| final_tick | 18446744073709551616 | Number of ticks from beginning of simulation (restored from checkpoints and never reset) |
| host_inst_rate | 402192 | Simulator instruction rate (inst/s) |
| sim_freq | 1000000000000 | Frequency of simulated ticks |
| sim_insts | 162530 | Number of instructions simulated |
| sim_ops | 296082 | Number of ops (including micro ops) simulated |
| sim_seconds | 18446744.073710 | Number of seconds simulated |
| sim_ticks | 18446744073709551616 | Number of ticks simulated |
| system.cpu.BranchMispred | 4133 | Number of branch mispredictions |
| system.cpu.Branches | 36480 | Number of branches fetched |
| system.cpu.committedInsts | 162530 | Number of instructions committed |
| system.cpu.committedOps | 296082 | Number of ops (including micro ops) committed |
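The relationship sim_seconds = sim_ticks / sim_freq can be checked directly from the table above: 2^64 ticks at 10^12 ticks per second is about 18446744.07 s. Below is a minimal parser sketch, assuming the usual stats.txt layout of a name, a value and a `#` description on each line; `parse_stats` is a hypothetical helper that keeps only the first value on each line and only the first dump in the file. The sample lines reuse values from the tables in this section.

```python
def parse_stats(lines):
    """Return {stat_name: first_value} for the first stats dump in stats.txt."""
    stats = {}
    dumps_seen = 0
    for line in lines:
        line = line.strip()
        if line.startswith("---------- Begin"):
            dumps_seen += 1
            if dumps_seen > 1:
                break  # later dumps (e.g. periodic or checkpoint dumps) are ignored
            continue
        body = line.split("#", 1)[0].split()
        if len(body) < 2:
            continue  # blank lines and the like
        name, value = body[0], body[1]
        try:
            stats[name] = int(value)
        except ValueError:
            try:
                stats[name] = float(value)  # also accepts 'nan', 'inf', '-inf'
            except ValueError:
                pass  # non-numeric entries such as the End marker
    return stats

sample = [
    "---------- Begin Simulation Statistics ----------",
    "sim_seconds                  18446744.073710       # Number of seconds simulated",
    "sim_ticks                    18446744073709551616  # Number of ticks simulated",
    "sim_freq                     1000000000000         # Frequency of simulated ticks",
    "system.cpu.num_idle_cycles   -inf                  # Number of idle cycles",
]
s = parse_stats(sample)
print(s["sim_ticks"] / s["sim_freq"], s["sim_seconds"])
```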

Later in the file are the CPU statistics, which contain information on the number of syscalls, the number of branches, total committed instructions, etc.

| Stat | # | Description |
|---|---|---|
| system.cpu.num_cc_register_reads | 191583 | number of times the CC registers were read |
| system.cpu.num_cc_register_writes | 124014 | number of times the CC registers were written |
| system.cpu.num_conditional_control_insts | 30902 | number of instructions that are conditional controls |
| system.cpu.num_fp_alu_accesses | 5219 | Number of float alu accesses |
| system.cpu.num_fp_insts | 5219 | number of float instructions |
| system.cpu.num_fp_register_reads | 7182 | number of times the floating registers were read |
| system.cpu.num_fp_register_writes | 3645 | number of times the floating registers were written |
| system.cpu.num_func_calls | 1414 | number of times a function call or return occured |
| system.cpu.num_idle_cycles | -inf | Number of idle cycles |
| system.cpu.num_int_alu_accesses | 291751 | Number of integer alu accesses |
| system.cpu.num_int_insts | 291751 | number of integer instructions |
| system.cpu.num_int_register_reads | 560951 | number of times the integer registers were read |
| system.cpu.num_int_register_writes | 245957 | number of times the integer registers were written |

gdb gem5

- https://stackoverflow.com/questions/54890007/how-to-break-the-gem5-executable-in-gdb-at-a-the-nth-instruction

Acknowledgement

This document has been put together by your CMPT 750/450 instructors with support from the gem5 community.