Possible Thesis Topics with Oliver Schulte (November 2025)

Most of my research is about machine learning for structured data: SQL, XML, network data, event logs, sports data, financial data. My main current interests are centred on two areas:

- Agent-Based Modelling and Reinforcement Learning

- Neuro-symbolic Logic: combining logic and deep graph learning

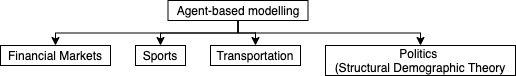

Agent-Based Modelling

An agent-based model is a generative model for time series data that result from the interactions of multiple (typically many) agents with an environment. Example domains include finance, sports, transportation, and even politics. In these domains, domain knowledge often makes it feasible to build a model of how the agents' environment functions. For example, we know the rules of a sport and we know how particular markets work. The main problem is modelling the behavior of the agents. The most common approach has been what I would call "agent simulation": define a priori a set of agent types (perhaps with parameters, e.g., "zero-intelligence agents"), then simulate how the interactions among these agents would develop in a given environment. A recent proposal is to connect agent simulation with reinforcement learning by introducing RL agents as an agent type that uses reinforcement learning to maximize its return. The project I envision is to learn agent types rather than pre-defining a set of types. Learning agent types would be an instance of what in reinforcement learning is called behavioral cloning. The research topic I would like to examine is multi-agent behavioral cloning for financial data. I propose two concrete projects as first steps.

- In the sports domain, we developed a method for multi-agent behavioral cloning through learning agent representations (embeddings). Can we learn representations for agents in the financial domain? For example, can we learn embeddings for wallets from blockchain data?

- Learning value functions. A value function assesses the expected return of a decision taken at a given environment state (market state). Reinforcement learning makes extensive use of model-free methods for learning value functions, meaning that they directly estimate expected return without modelling or simulating the agent dynamics. You can compare this to modelling aggregate features of a gas, like temperature, without modelling individual molecules. Can we learn value functions for financial markets? An important variant would be to extend model-free learning to quantities other than return, such as variance or volatility. In sports we applied distributional RL to estimate the variability of returns, not only the expectation.
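To make the model-free idea in the value-function project concrete, here is a minimal sketch of estimating action values directly from logged decisions with a SARSA-style temporal-difference update. It never builds a model of how other agents behave or how the market moves. The state names ("calm", "volatile"), the actions, the rewards, and the function name `sarsa_evaluate` are all hypothetical illustrations, not taken from any of the projects or papers mentioned here.

```python
from collections import defaultdict

# Hypothetical logged episodes: each step is (market_state, action, reward).
# The data is invented purely for illustration.
LOGGED_EPISODES = [
    [("calm", "buy", 1.0), ("volatile", "hold", -0.5)],
    [("calm", "hold", 0.2), ("calm", "buy", 1.0), ("volatile", "hold", -0.5)],
]


def sarsa_evaluate(episodes, alpha=0.1, gamma=1.0, sweeps=500):
    """Estimate Q(state, action) from logged episodes with a SARSA-style update.

    Model-free: only observed (state, action, reward) steps are used; no
    transition model or simulation of the other agents is required.
    """
    q = defaultdict(float)
    for _ in range(sweeps):
        for episode in episodes:
            for t, (state, action, reward) in enumerate(episode):
                if t + 1 < len(episode):
                    next_state, next_action, _ = episode[t + 1]
                    bootstrap = q[(next_state, next_action)]
                else:
                    bootstrap = 0.0  # the episode ends after this step
                key = (state, action)
                # Move the estimate toward the one-step bootstrapped target.
                q[key] += alpha * (reward + gamma * bootstrap - q[key])
    return dict(q)


q_values = sarsa_evaluate(LOGGED_EPISODES)
for (state, action), value in sorted(q_values.items()):
    print(f"Q({state}, {action}) = {value:.3f}")
```

In this toy log every transition is deterministic, so the estimates settle on the exact discounted sums of rewards. With real market data the same update would average over noisy outcomes, and a distributional variant would track more than the mean.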

Assets and Criteria

- Any background in

  - deep reinforcement learning, especially multi-agent RL

  - deep learning for time series (e.g., LSTM, diffusion models)

  - game theory

Scientific Background

- Manifesto on agent-based modelling in capital markets. Simudyne is a company that has developed major tools and platforms for agent-based models in finance. They wrote a post summarizing their experience with several references to related literature.

- Michael Wellman on the implications of AI for financial markets. A short talk (15 minutes) that gives references to his work on a number of topics, including the impact of algorithmic trading, system stability, and large language models.

- Using Agent-Based Models for Analyzing Threats to Financial Stability. An older survey with many examples of agent-based modelling, especially from finance and economics, but also from transportation and social networks. No math. The traffic modelling example is short and especially pertinent because they based the agent model on observations of actual traffic behavior rather than pre-defined agent types.

- Gradient-Assisted Calibration for Financial Agent-Based Models. A recent piece from a top conference. Gives you an example of agent-based modelling in a simplified market. You need to have a strong background in deep learning, but if you do, it is a good example of how to apply deep learning for agent-based modelling.

- A Financial Market Simulation Environment for Trading Agents Using Deep Reinforcement Learning. A recent paper from a well-known group in a top conference. Develops an agent-based framework for simulating general markets. Introduces an RL agent as a possible agent type. Shows that RL agents that keep learning to maximize their returns jointly approximate a market equilibrium; Michael Wellman refers to this approach as "empirical game theory" (I would call it game theory based on simulation).

Neuro-symbolic AI: Using logical rules to enhance graph learning.

This project combines logic-based knowledge representation with deep graph learning methods. A common logic-based knowledge representation formalism is if-then rules, for example "if a person works in a city, they probably live in that city too." How can we leverage a set of if-then rules to improve deep graph learning? I have developed an approach within a framework known as semantic loss, where the knowledge serves as a regularizer to define a new training objective for graph neural networks. My group is working on two tasks to evaluate this idea.

- Generating graphs with a graph generative model (e.g., Variational Graph Auto-encoder, Graph Diffusion)

- Training graph neural networks. GNNs typically produce node embeddings for an input graph. They are usually evaluated on predictive tasks such as link prediction and node classification.
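As a rough illustration of how an if-then rule can act as a regularizer, the sketch below computes a generic semantic-loss term for the example rule "works in a city implies lives in that city" and adds it to a standard link-prediction loss. This is a simplified sketch and not the approach developed in my group. It assumes that predicted edge probabilities are independent, and the function names, the weight of 0.5, and the probabilities are hypothetical.

```python
import math


def rule_satisfaction_prob(p_body, p_head):
    """Probability that 'body implies head' holds for independent edges.

    The rule is violated only when the body edge exists and the head edge
    does not, so P(satisfied) = 1 - p_body * (1 - p_head).
    """
    return 1.0 - p_body * (1.0 - p_head)


def semantic_loss(groundings, eps=1e-12):
    """Mean negative log-probability that each rule grounding is satisfied."""
    total = 0.0
    for p_body, p_head in groundings:
        total += -math.log(max(rule_satisfaction_prob(p_body, p_head), eps))
    return total / len(groundings)


def binary_cross_entropy(predictions, labels, eps=1e-12):
    """Standard link-prediction loss on observed edge labels (0 or 1)."""
    total = 0.0
    for p, y in zip(predictions, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(predictions)


def regularized_objective(predictions, labels, groundings, weight=0.5):
    """Data loss plus the rule-based regularizer (weight is a free choice)."""
    return binary_cross_entropy(predictions, labels) + weight * semantic_loss(groundings)


# Hypothetical model outputs: (P(works_in(person, city)), P(lives_in(person, city))).
consistent = [(0.9, 0.9), (0.1, 0.2)]
inconsistent = [(0.9, 0.1), (0.1, 0.2)]
print(semantic_loss(consistent), semantic_loss(inconsistent))
```

Predictions that contradict the rule (a confident works_in edge without a lives_in edge) receive a larger penalty, so gradient-based training of a GNN with this term would be nudged toward rule-consistent embeddings.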

Assets and Criteria

- For SFU students: strong transcript, not necessarily in computer science (e.g., statistics is also related).

- Any background in

  - graph neural networks

  - formal logic

Scientific Background

- Our workshop paper

- Henry Kautz's Engelmore lecture paper

- Henry Kautz's Engelmore lecture video

- Survey paper: "Neurosymbolic AI: The 3rd Wave"

- Baselines